When heavy traffic hit my site during a recent product launch, I needed a robust way to ensure it wouldn’t crash. That’s when Python and Locust came to the rescue. Locust allowed me to simulate hundreds of users visiting key pages, revealing bottlenecks I hadn’t considered before. Here’s the exact method I used to identify and fix performance issues, ensuring my site stayed fast and reliable.

1. Installing Visual Studio Code

Before we get started, you’ll need Visual Studio Code (VS Code) installed. If you don’t have it yet, download it from the official Visual Studio Code website (code.visualstudio.com) and install it on your machine. It’s a lightweight but powerful code editor with a built-in terminal, so you can write and run your scripts in one place.

2. Installing Locust

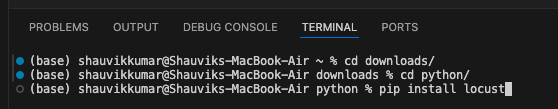

Locust is the Python library I used to simulate multiple users accessing my site simultaneously. To install Locust, follow these steps:

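- Open VS Code, open the integrated terminal (Terminal > New Terminal), and navigate to the folder where you’ll keep your load-testing scripts. Locust will be installed into whichever Python environment that terminal uses.

- Type the following command:

```bash
pip install locust
```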

This will install Locust, making it ready for use in your load testing.
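To confirm that Locust landed in the same Python environment your terminal uses, you can ask Python for the installed version. This is a minimal sketch using only the standard library’s `importlib.metadata`; the helper name `installed_version` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it isn't installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version("locust")
    print(f"Locust {found} is installed." if found else "Locust is not installed here.")
```

Run it with the same `python` that the VS Code terminal uses; if it reports that Locust is missing, pip most likely installed it into a different environment.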

3. Writing a Locust Script

Next, I wrote a Locust script to simulate users visiting my staging site. Here’s a simple script that simulates users loading the homepage and the blog section:

```python
from locust import HttpUser, task, between


class WebsiteUser(HttpUser):
    wait_time = between(1, 2.5)  # Users wait between 1 and 2.5 seconds between tasks

    @task
    def load_homepage(self):
        self.client.get("/")

    @task
    def load_posts(self):
        self.client.get("/blog/")  # Assuming '/blog/' is where your posts are listed. Adjust if different.

    # Add more tasks as needed to simulate other user actions on your site
```

Here’s a breakdown of what each part does:

- HttpUser: This is the base class from Locust that helps simulate HTTP-based users.

- wait_time = between(1, 2.5): I set a randomized wait time between 1 and 2.5 seconds to simulate the think time users have between actions.

- @task: Methods marked with this decorator are the actions a simulated user performs, such as loading the homepage or the blog section.

You can adjust the paths (/ and /blog/) to match your own staging site’s URLs.

Usage:

- When this script is run using Locust, it creates virtual users (WebsiteUser) who:

  - Wait a random 1 to 2.5 seconds between tasks.

  - Repeatedly run one of the tasks defined in the class, chosen at random (e.g., load the homepage or the blog posts).

- You can adjust or add more tasks to simulate additional user interactions, such as logging in or submitting forms.
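To make that behavior concrete, here is a toy, pure-Python model of one simulated user’s loop: pick a task at random, run it, then wait a random 1 to 2.5 seconds. It is not Locust’s actual implementation; `FakeClient` and `run_user` are hypothetical stand-ins so the sketch runs without Locust or a server.

```python
import random
import time
from typing import Callable, List


class FakeClient:
    """Hypothetical stand-in for Locust's self.client: it only records paths."""

    def __init__(self) -> None:
        self.requested: List[str] = []

    def get(self, path: str) -> None:
        self.requested.append(path)


def run_user(
    iterations: int,
    rng: random.Random,
    sleep: Callable[[float], None] = time.sleep,
) -> FakeClient:
    """Simulate one user running `iterations` tasks with think time in between."""
    client = FakeClient()
    # The same two tasks as the Locust script: homepage and blog listing.
    tasks = [lambda: client.get("/"), lambda: client.get("/blog/")]
    for _ in range(iterations):
        rng.choice(tasks)()         # pick one task at random (equal weights)
        sleep(rng.uniform(1, 2.5))  # think time, like between(1, 2.5)
    return client
```

For example, `run_user(5, random.Random(42), sleep=lambda s: None).requested` returns five paths without actually sleeping. Locust runs a loop like this for every simulated user at the same time, which is what produces the concurrent load.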

4. Navigating to the Correct Directory

Once I wrote the script, I saved it as load_test.py. The next step was to navigate to the directory where the script is saved. Use the following command, replacing the path with the folder where you saved your own copy:

```bash
cd ~/Downloads/python/
```

5. Running the Load Test

Now comes the exciting part: running the load test!

Run the test using this command:

```bash
locust -f load_test.py --host=https://your-staging-site-url.com
```

Make sure to replace https://your-staging-site-url.com with your actual staging site URL.

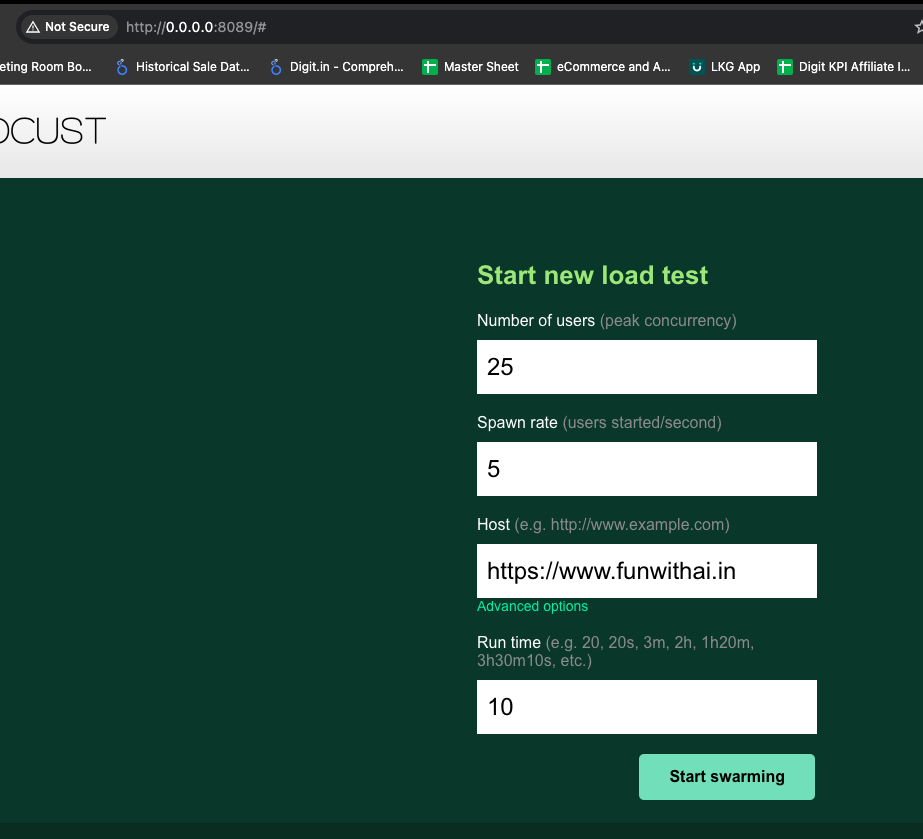

After running the command, open a browser and go to http://localhost:8089. This opens the Locust web interface, where you can set the number of users and the spawn rate (how many new users are started per second).
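If you’d rather skip the web UI (for example, to repeat the same test from a script), Locust can also run headless. Below is a minimal sketch that builds that command line from Python. `--headless`, `--users`, `--spawn-rate`, `--run-time`, and `--csv` are Locust command-line options, while the helper name `build_locust_command`, the default numbers, and the `results` CSV prefix are illustrative assumptions; check `locust --help` for your installed version.

```python
from typing import List


def build_locust_command(
    locustfile: str,
    host: str,
    users: int = 50,
    spawn_rate: int = 5,
    run_time: str = "2m",
    csv_prefix: str = "results",
) -> List[str]:
    """Build the argument list for a headless Locust run (example values only)."""
    return [
        "locust",
        "-f", locustfile,
        f"--host={host}",
        "--headless",                  # no web UI; the test starts immediately
        f"--users={users}",            # peak number of simulated users
        f"--spawn-rate={spawn_rate}",  # users started per second
        f"--run-time={run_time}",      # stop automatically after this long
        f"--csv={csv_prefix}",         # save stats to <prefix>_stats.csv etc.
    ]
```

You could start the run with `subprocess.run(build_locust_command("load_test.py", "https://your-staging-site-url.com"), check=True)`, or type the same flags directly in the terminal. In headless mode the statistics are printed to the terminal, and `--csv` also saves them to files.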

Load Test Example

Locust provides a web-based interface that allows you to easily interpret the results of your load test.

6. Bonus: Running Load Tests Only for Selected URLs

In some cases, you may want to test only specific URLs on your site, especially when you’re working with multiple endpoints. I made sure to simulate traffic on my most important pages to replicate real-world user behavior. Here’s how I modified my script to do that:

- Prepare a list of URLs you want to test. For instance, create a file named urls.txt and list one URL per line.

- Modify your script to read the URLs from urls.txt and test them accordingly:

```python
from locust import HttpUser, task, between


class WebsiteUser(HttpUser):
    wait_time = between(1, 2.5)  # Users wait between 1 and 2.5 seconds between tasks

    # Read the list of URLs from the file once, when the class is defined.
    # Blank lines are skipped so they don't turn into empty requests.
    with open("urls.txt", "r") as f:
        urls = [line.strip() for line in f if line.strip()]

    @task
    def load_multiple_urls(self):
        # Request every URL in the list, one after another
        for url in self.urls:
            self.client.get(url)

    # If you want to add more specific tasks or behaviors, you can add them here
```

Each time a simulated user runs this task, it requests every URL listed in urls.txt in turn, spreading realistic traffic across all the pages you care about.

Run the test again:

```bash
locust -f load_test.py --host=https://your-staging-site-url.com
```

Note: Ensure that the paths in urls.txt start with a / (e.g., /automated-seo/, /another-page/, etc.) and do not include the domain since the domain is specified in the --host parameter of the locust command.
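One way to catch these mistakes before the test starts is to validate urls.txt as you read it. The sketch below uses a hypothetical helper, `load_paths`, that skips blank lines and `#` comment lines and raises an error for any entry that does not start with `/`. The comment convention is my own assumption, not something Locust requires.

```python
from pathlib import Path
from typing import List


def load_paths(filename: str = "urls.txt") -> List[str]:
    """Read one URL path per line, ignoring blank lines and '#' comments."""
    paths = []
    lines = Path(filename).read_text().splitlines()
    for line_no, raw in enumerate(lines, start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if not line.startswith("/"):
            # Full URLs would double up with the domain passed via --host.
            raise ValueError(
                f"{filename}:{line_no}: {line!r} must be a path starting with '/'"
            )
        paths.append(line)
    if not paths:
        raise ValueError(f"{filename} contains no URL paths")
    return paths
```

Inside the class body you could then write `urls = load_paths("urls.txt")` instead of the `with open(...)` block, so a bad entry stops Locust at startup rather than producing a run full of failed requests.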

Remember, when load testing with multiple URLs, it’s essential to monitor server health and be prepared to stop the test if necessary.

Before you look into your data, here’s a breakdown of the key metrics and what they mean:

- Total Requests: The total number of HTTP requests made during the test.

- Total Failures: The number of failed HTTP requests. A high number here indicates potential issues with your website under load.

- Requests/s: The average number of HTTP requests made per second.

- Failures/s: The average number of failed HTTP requests per second.

- Users: The current number of simulated users.

- Response Time (ms):

  - Median: 50% of the requests were faster than this value, and 50% were slower. It’s a good measure of “typical” performance because, unlike the average, it isn’t pulled up by a few very slow requests.

  - Average: The average response time of all requests.

  - Min/Max: The fastest and slowest response times recorded.

- Content Size: The average size of the HTTP response content.

- Request Distribution:

  - 50%: 50% of all requests were faster than this value.

  - 66%: 66% of all requests were faster than this value.

  - 75%: 75% of all requests were faster than this value.

  - 80%, 90%, 95%, 98%, 99%, 100%: These percentiles give you a sense of the distribution of request times, especially how slow the slowest requests get. The 100% value is the maximum.
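To see how these numbers relate to each other, here is a small standard-library sketch that computes the same kinds of statistics from a list of response times. It uses the nearest-rank definition of a percentile. Locust’s own percentiles may differ slightly, since it rounds response times internally before computing them. The function names and sample data are made up for illustration.

```python
import math
from typing import Dict, List


def percentile(times_ms: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(times_ms)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(times_ms: List[float]) -> Dict[str, float]:
    """Median, average, min/max and the percentile columns for one endpoint."""
    if not times_ms:
        raise ValueError("no response times to summarize")
    summary = {
        "median": percentile(times_ms, 50),
        "average": sum(times_ms) / len(times_ms),
        "min": min(times_ms),
        "max": max(times_ms),
    }
    for pct in (66, 75, 80, 90, 95, 98, 99, 100):
        summary[f"{pct}%"] = percentile(times_ms, pct)
    return summary
```

For example, `summarize([200, 300, 400, 500, 50000])` gives a median of 400 ms but an average of 10,280 ms, which shows how a single very slow request can make the average misleading.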

Request Statistics

Here’s what I got for my site:

- GET /:

  - `# Requests`: 111 requests were made to the homepage.

  - `# Fails`: 2 requests failed.

  - Average (ms): On average, it took 14,776 milliseconds (or about 14.8 seconds) to get a response.

  - Min (ms): The fastest response time was 214 milliseconds.

  - Max (ms): The slowest response time was 50,785 milliseconds (or about 50.8 seconds).

  - Average size (bytes): The average size of the response was 359,180 bytes (or about 359 KB).

  - RPS: The system handled an average of 1.9 requests per second.

  - Failures/s: 0.0 failures per second. The rate rounds to zero because only 2 requests failed, but those failures are still worth investigating.

- GET /automated-seo/:

  - Similar metrics as above, but for the /automated-seo/ endpoint. Notably, the average response time is 17,375 milliseconds (or about 17.4 seconds), and there were 7 failed requests.

- Aggregated:

  - This section provides a combined view of both endpoints. The average response time for all requests was 16,002 milliseconds (or about 16 seconds).
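A failure rate per second that rounds to 0.0 can hide a noticeable share of failed requests. Dividing failures by total requests puts the homepage numbers in perspective; the helper name `failure_percentage` is hypothetical, and the inputs are the homepage figures reported above.

```python
def failure_percentage(failures: int, requests: int) -> float:
    """Share of requests that failed, as a percentage."""
    if requests <= 0:
        raise ValueError("requests must be positive")
    return 100 * failures / requests


# Homepage figures from the run above: 111 requests, 2 failures.
home = failure_percentage(2, 111)
print(f"GET /: {home:.1f}% of requests failed")  # GET /: 1.8% of requests failed
```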

Response Time Statistics

Here are the percentiles for response times, which show how response times are distributed:

- GET /:

  - 50%ile (ms): Half of the requests to the homepage were faster than 4,500 milliseconds (or 4.5 seconds), and half were slower.

  - 90%ile (ms): 90% of the requests were faster than 40,000 milliseconds (or 40 seconds), and the remaining 10% took longer.

- GET /automated-seo/:

  - 50%ile (ms): Half of the requests to /automated-seo/ were faster than 7,000 milliseconds (or 7 seconds).

  - 90%ile (ms): 90% of the requests were faster than 41,000 milliseconds (or 41 seconds).

- Aggregated:

  - Combined percentiles for both endpoints.

Interpretation

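- The average response times are very high: almost 15 seconds for the homepage and over 17 seconds for /automated-seo/. Ideally, web pages should load in under 3 seconds for a good user experience, and these figures cover only the HTML responses, because Locust’s HttpUser does not download the images, scripts, or stylesheets a browser would also fetch.

- The /automated-seo/ endpoint also had 7 failed requests, which might indicate problems under load.

- The percentiles reveal a long tail: while the 50th percentile for the homepage is 4.5 seconds, the 90th percentile jumps to 40 seconds, so a sizeable share of visitors would wait far longer than the median suggests. A spread like this often points to a server that starts queuing requests once it is saturated; the Charts tab in the Locust web UI lets you check whether response times climb as the user count ramps up.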
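This comparison can be automated so that future runs are checked the same way. The sketch below flags every endpoint percentile that exceeds a budget. The 3-second budget comes from the guideline above, the percentile values are the ones reported for this site, and the function name `over_budget` is hypothetical. Because these are server response times only, a page that misses the budget here will miss it in a browser too.

```python
from typing import Dict, List

# Percentiles (in ms) reported above for each endpoint.
PERCENTILES_MS: Dict[str, Dict[str, int]] = {
    "GET /": {"p50": 4500, "p90": 40000},
    "GET /automated-seo/": {"p50": 7000, "p90": 41000},
}


def over_budget(
    percentiles: Dict[str, Dict[str, int]], budget_ms: int = 3000
) -> List[str]:
    """Describe every endpoint percentile that is slower than budget_ms."""
    problems = []
    for endpoint, values in percentiles.items():
        for name, ms in values.items():
            if ms > budget_ms:
                problems.append(
                    f"{endpoint} {name} = {ms / 1000:.1f} s "
                    f"(budget {budget_ms / 1000:.1f} s)"
                )
    return problems


for problem in over_budget(PERCENTILES_MS):
    print(problem)
```

With this site’s numbers, all four values exceed the 3-second budget, which matches the interpretation above.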

Key Recommendations

- Optimize Server Performance: Check if the server resources (CPU, RAM, Disk I/O) are adequate. Consider upgrading the hosting plan or optimizing server configurations.

- Optimize Website Content: Large images, scripts, or other assets can slow down page load times. Consider using caching, optimizing images, and minifying scripts.

- Database Optimization: Slow database queries can be a bottleneck. Consider optimizing database queries, using a database cache, or optimizing the database structure.

- Content Delivery Network (CDN): Using a CDN can help distribute the load and serve content faster to users from various locations.

- Error Investigation: Investigate the failed requests to determine the cause of the failures.

- Further Testing: Consider running more tests with different user scenarios, varying the number of users, or testing other endpoints.
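For the error investigation step, running Locust with the `--csv` option writes a `<prefix>_failures.csv` file alongside the stats files. The sketch below tallies failures per endpoint and error message. It assumes the column names `Method`, `Name`, `Error`, and `Occurrences` that recent Locust versions write; check the header row of your own file, since that layout is an assumption here. The function name `top_failures` is hypothetical.

```python
import csv
from collections import Counter
from typing import List, Tuple


def top_failures(path: str, limit: int = 10) -> List[Tuple[Tuple[str, str, str], int]]:
    """Return the most frequent (method, name, error) failures in a failures CSV."""
    counts: Counter = Counter()
    with open(path, newline="") as f:
        for row in csv.DictReader(f):
            key = (row["Method"], row["Name"], row["Error"])
            counts[key] += int(row["Occurrences"])
    return counts.most_common(limit)
```

For example, `for (method, name, error), n in top_failures("results_failures.csv"): print(n, method, name, error)` lists the most common errors first, which tells you whether timeouts, server errors, or dropped connections dominate and where to look in your server logs.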

Remember, while load testing provides valuable insights, it’s essential to combine these findings with other monitoring and diagnostic tools to get a comprehensive view of your website’s performance.

Conclusion: Load testing is an essential step in ensuring your website can handle traffic surges, whether it’s from a marketing campaign or unexpected spikes. By following the steps in this guide, I was able to identify and address bottlenecks on my own site, improving performance under load.

Disclaimer: Load testing can put significant stress on your server and might cause issues if the server is not equipped to handle the load. Always monitor server health during testing and be prepared to stop the test if necessary.

Stay productive, stay creative, and most importantly—have fun with AI!